AI Threats Detection

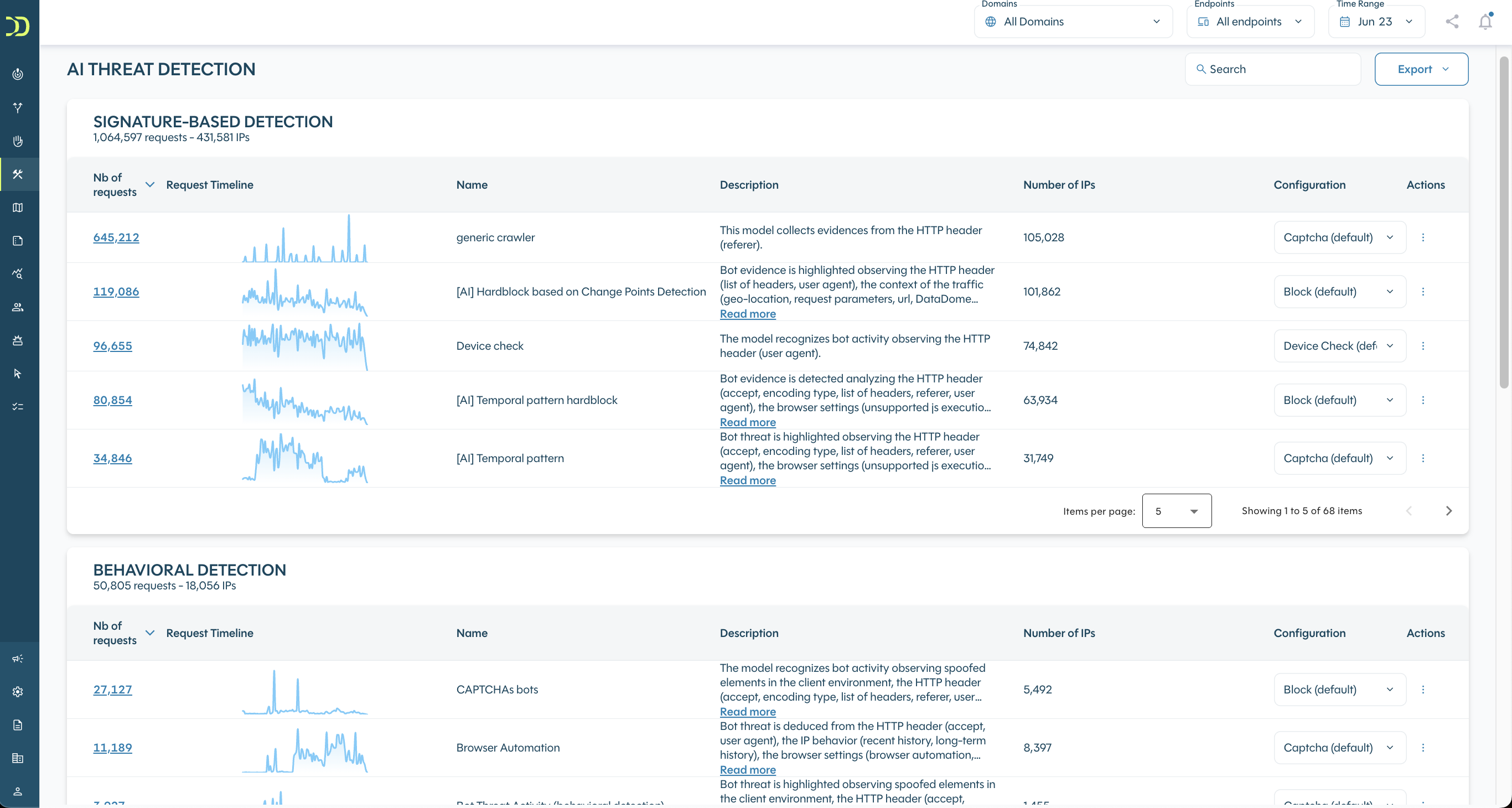

The "AI Threats Detection" section gives you an overview of the models DataDome uses to protect you from threats and lets you manage them.

1. Overview

DataDome threat detection models are built with machine learning techniques and are continuously updated.

The models are grouped into four categories that describe their detection mechanisms:

- Signature-Based detection

Models that use signature detection to identify malicious traffic. Signatures can be static or dynamic (generated with ML) and leverage fingerprinting, such as TLS fingerprints, browser fingerprints, and HTTP headers.

- Behavioral detection

Models that detect threats based on abnormal or aggressive behavior. Bots in this category were detected by behavioral rules and ML models that identified behavior not linked to human activity, e.g. too many login attempts.

- Reputational detection

Models that detect threats whose requests originate from an IP that recently acted maliciously, or from an IP that our ML models recently flagged as a (data center or residential) proxy. Since several users can share the same IP, we also combine IP reputation with other criteria for more fine-grained detection.

- Vulnerability Scanner detection

Models that detect threats that systematically enumerate, catalog, and examine identifiable, predictable, and unknown content locations, paths, file names, and parameters in order to find weaknesses and possible security vulnerabilities.
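To make the Behavioral detection example concrete, here is a minimal sketch of a sliding-window rule that flags a client making too many login attempts. It only illustrates the general technique and is not DataDome's model; the `LoginAttemptCounter` class, the client identifier, and the thresholds are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict, deque


class LoginAttemptCounter:
    """Flags a client that makes more than `max_attempts` login attempts
    within a sliding window of `window_seconds` (hypothetical thresholds)."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 60.0) -> None:
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        # Client identifier -> timestamps of that client's recent login attempts.
        self._attempts: dict[str, deque[float]] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one login attempt (timestamps assumed non-decreasing);
        return True if the client is now over the limit."""
        attempts = self._attempts[client_id]
        attempts.append(timestamp)
        # Forget attempts that are older than the window.
        while attempts and timestamp - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) > self.max_attempts


counter = LoginAttemptCounter(max_attempts=3, window_seconds=10)
print([counter.record("203.0.113.7", t) for t in (0, 1, 2, 3)])
# [False, False, False, True]
```

Keeping one queue per client means each attempt only costs the removal of expired timestamps, so the rule stays cheap even on busy login endpoints.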

2. Specific and general models

Some models are built to detect threats in restricted contexts and apply only to endpoints with specific traffic usages (login, account creation, add to cart, forms, payment). Other models are generic and apply to all endpoints.

For this reason, it is very important to define your endpoints carefully when setting up your DataDome account.
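The sketch below illustrates why endpoint definitions matter: a model scoped to a traffic usage only runs on endpoints declared with that usage, while generic models run everywhere. The `Model` and `Endpoint` types, the usage identifiers, and the model names are hypothetical and do not reflect DataDome's API or configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass

# Traffic usages named in this section (identifiers are illustrative).
USAGES = frozenset({"login", "account_creation", "add_to_cart", "forms", "payment"})


@dataclass(frozen=True)
class Endpoint:
    path: str
    usage: str | None = None  # None: no specific traffic usage declared

    def __post_init__(self) -> None:
        if self.usage is not None and self.usage not in USAGES:
            raise ValueError(f"unknown usage: {self.usage}")


@dataclass(frozen=True)
class Model:
    name: str
    usages: frozenset[str] = frozenset()  # empty: generic model, applies everywhere

    def applies_to(self, endpoint: Endpoint) -> bool:
        return not self.usages or endpoint.usage in self.usages


def models_for(endpoint: Endpoint, models: list[Model]) -> list[str]:
    """Names of the models that evaluate traffic on this endpoint."""
    return [m.name for m in models if m.applies_to(endpoint)]


models = [
    Model("login-specific model", frozenset({"login"})),
    Model("generic model"),
]
print(models_for(Endpoint("/account/login", usage="login"), models))
# ['login-specific model', 'generic model']
print(models_for(Endpoint("/account/login"), models))
# ['generic model']
```

In this sketch, a login endpoint declared without its `login` usage is only evaluated by generic models, so the login-specific models never see its traffic.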

3. Models management

Allow response: The Allow response is not supported for AI Threats Detection models. Use the disable feature instead.

You can access the list of models and manage the response for each of them. For AI Threats Detection, only three responses can be set:

- Captcha

- Block

- Device Check

The default response suggested by DataDome is indicated in the list.

Learn more about all the responses
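As a minimal sketch of the response rule above, assuming a hypothetical dictionary-based model configuration (this is not DataDome's API), assigning a response to a model could be validated like this:

```python
from __future__ import annotations

from enum import Enum


class Response(Enum):
    ALLOW = "Allow"
    CAPTCHA = "Captcha"
    BLOCK = "Block"
    DEVICE_CHECK = "Device Check"


# The only responses that can be set on an AI Threats Detection model.
AI_MODEL_RESPONSES = frozenset({Response.CAPTCHA, Response.BLOCK, Response.DEVICE_CHECK})


def set_model_response(model_config: dict, response: Response) -> dict:
    """Return a copy of a (hypothetical) model config with a new response."""
    if response not in AI_MODEL_RESPONSES:
        raise ValueError(
            f"{response.value} is not supported for AI Threats Detection models; "
            "disable the model instead"
        )
    return {**model_config, "response": response}


config = set_model_response({"model": "Residential proxies"}, Response.DEVICE_CHECK)
print(config["response"].value)  # Device Check
```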

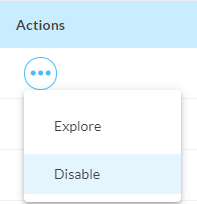

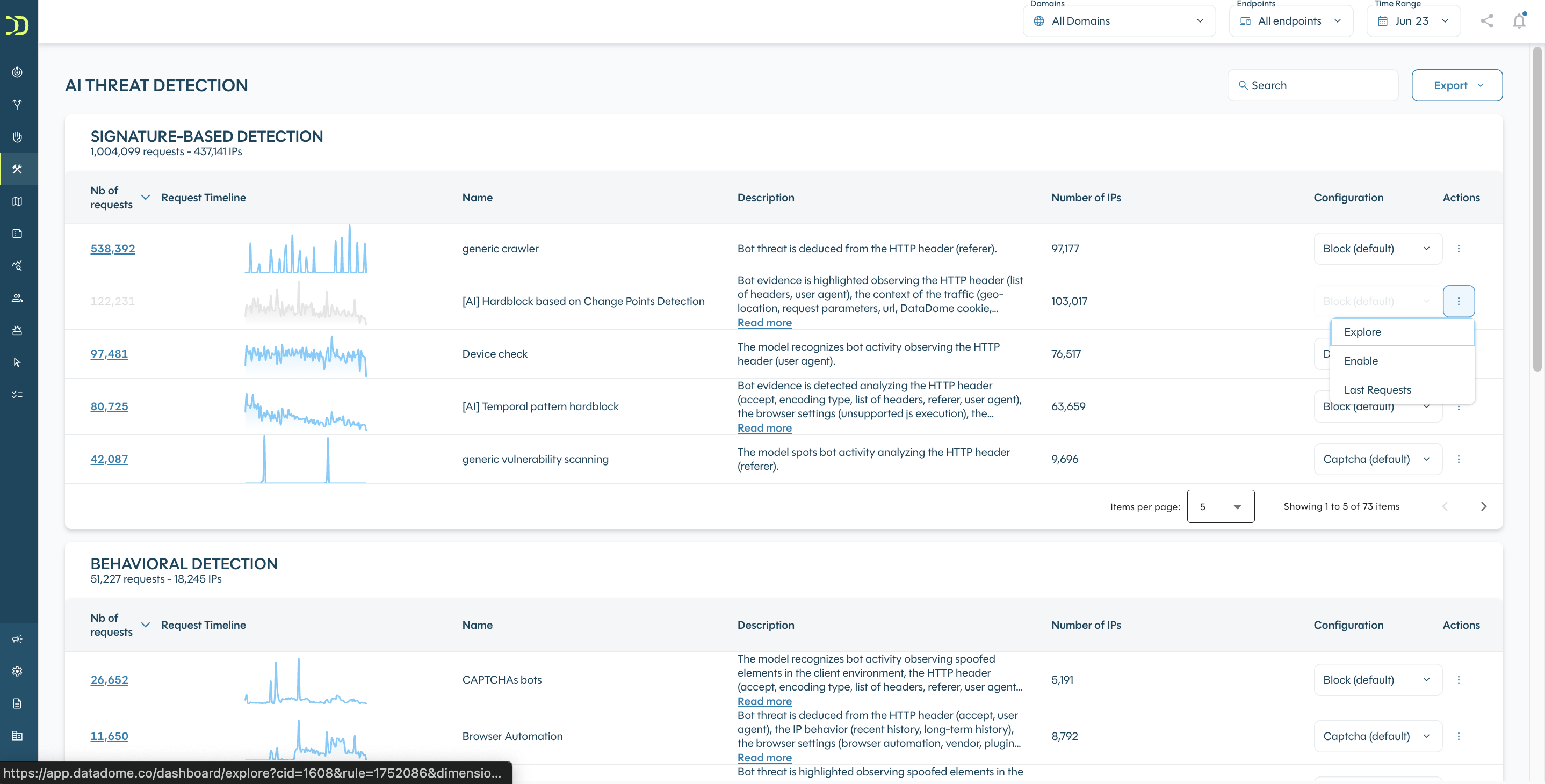

You can also enable or disable any model from the Actions menu:

Model disabled: When a model is disabled, it no longer matches any traffic on its associated criteria, and the corresponding response is deactivated. The traffic is still monitored by the other models.

After a model is disabled, you can still access the last requests that matched it from the Actions menu.

You can enable it again at any time.
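The enable and disable behavior described above can be sketched as follows. `DetectionModel`, its `matches` predicate, the `is_scripted` rule, and the 100-request history are hypothetical illustrations, not DataDome's implementation: a disabled model stops matching, the other models still evaluate the traffic, and the requests it matched before being disabled remain available.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field
from typing import Callable

Request = dict  # hypothetical request representation


@dataclass
class DetectionModel:
    name: str
    matches: Callable[[Request], bool]
    enabled: bool = True
    # Most recent requests this model matched; kept after the model is disabled.
    last_matches: deque = field(default_factory=lambda: deque(maxlen=100))

    def check(self, request: Request) -> bool:
        if not self.enabled:
            return False  # a disabled model no longer matches any traffic
        if self.matches(request):
            self.last_matches.append(request)
            return True
        return False


def matching_models(models: list[DetectionModel], request: Request) -> list[str]:
    """Names of the enabled models that match the request."""
    return [m.name for m in models if m.check(request)]


def is_scripted(request: Request) -> bool:
    # Hypothetical matching rule used by both example models.
    return request.get("user_agent") == "python-requests/2.31"


model_a = DetectionModel("Model A", is_scripted)
model_b = DetectionModel("Model B", is_scripted)
request = {"user_agent": "python-requests/2.31"}

print(matching_models([model_a, model_b], request))  # ['Model A', 'Model B']
model_a.enabled = False
print(matching_models([model_a, model_b], request))  # ['Model B']
print(len(model_a.last_matches))  # 1
```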

4. Models definition

Signature-Based detection

UserAgent Library

Bots using the popular UserAgent Library.

Fake Browsers and Fake Browser Login

Bots that modified their user agent and other HTTP headers to look like a legitimate human browser.

UserAgent Crawler

Bots using the popular UserAgent Crawler.

Inconsistent HTTP headers

Bots that improperly forged their HTTP headers.
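As an illustration of what "inconsistent" can mean, here is a sketch of two header consistency heuristics. Both rules and the `header_inconsistencies` function are hypothetical examples, not DataDome's signatures, and real browsers can trip them in edge cases (for example, Chromium-based browsers send `Sec-CH-UA` client hints by default only over HTTPS).

```python
from __future__ import annotations


def header_inconsistencies(headers: dict[str, str]) -> list[str]:
    """Return reasons why a request's headers look forged (illustrative rules only)."""
    # HTTP header names are case-insensitive, so normalize them first.
    h = {name.lower(): value for name, value in headers.items()}
    user_agent = h.get("user-agent", "")
    reasons = []
    # Hypothetical rule: a browser-like user agent normally comes with Accept-Language.
    if user_agent.startswith("Mozilla/") and "accept-language" not in h:
        reasons.append("browser user agent without Accept-Language")
    # Hypothetical rule: Chromium-based browsers send Sec-CH-UA over HTTPS by default.
    if "Chrome/" in user_agent and "sec-ch-ua" not in h:
        reasons.append("Chrome user agent without Sec-CH-UA")
    return reasons


forged = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) Chrome/120.0.0.0 Safari/537.36"}
print(header_inconsistencies(forged))
# ['browser user agent without Accept-Language', 'Chrome user agent without Sec-CH-UA']
```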

Headless Browser Forged Fingerprint

Headless browsers (Chrome/Firefox/Safari) instrumented with frameworks such as Puppeteer/Selenium/Playwright that modified their browser fingerprint to try to appear human.

UserAgent Browser Automation

Bots using the popular UserAgent Browser Automation.

Puppeteer Extra Stealth

Bots based on (headless) Chrome and instrumented with the Puppeteer Extra Stealth plugin.

Scraping (signature detection)

Scrapers detected because they have an inconsistent browser fingerprint.

UserAgent linux command

Bots using the popular UserAgent Linux command.

saasCrawlers

Bot signatures linked to scraping SaaS and bot-as-a-service providers.

Reputational detection

Bad IP reputation (data center IPs)

Bots that operate from data center IPs that were recently flagged as malicious by DataDome’s detection engine.

Bad IP reputation

Bots that operate from IP addresses with a bad reputation.

Residential proxies

Bots that route their traffic through residential proxies.

Free proxies IPs

Bots that route their traffic through free proxies (proxies that are freely listed on the Internet).

Shared data center proxies

Bots that route their traffic through IPs that have been identified as data center proxies.
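As noted in the Overview, IP reputation is combined with other criteria because several users can share the same IP. The sketch below shows one way such a combination could work; the `RequestSignals` fields and the combining rule are hypothetical and do not describe DataDome's actual logic.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSignals:
    ip_recently_flagged: bool  # IP acted maliciously or was flagged as a proxy recently
    fingerprint_consistent: bool  # e.g. the outcome of a signature check
    behavior_suspicious: bool  # e.g. the outcome of a behavioral check


def reputational_match(signals: RequestSignals) -> bool:
    """Hypothetical rule: a flagged IP alone is not enough, since IPs can be shared."""
    if not signals.ip_recently_flagged:
        return False
    # Require at least one corroborating signal before matching.
    return not signals.fingerprint_consistent or signals.behavior_suspicious


# A legitimate user behind a shared, recently flagged IP is not matched:
print(reputational_match(RequestSignals(True, True, False)))  # False
# The same IP combined with a forged fingerprint is matched:
print(reputational_match(RequestSignals(True, False, False)))  # True
```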
