Integrate via Terraform

Installation with Terraform

Prerequisites

- DATADOME_SERVER_SIDE_KEY, available in your DataDome dashboard,
- DATADOME_CLIENT_SIDE_KEY, available in your DataDome dashboard,
- an up-to-date version of Terraform,
- CLOUDFLARE_API_TOKEN with permission to edit Workers scripts and routes, created following this guide,
- CLOUDFLARE_ZONE_ID, available in your Domain Overview page in Cloudflare,
- CLOUDFLARE_ACCOUNT_ID, available in your Domain Overview page in Cloudflare.

Protect your traffic

- Download the latest version of our Cloudflare Worker script.

- Create an empty Terraform file, for example `datadome_worker.tf`, and paste the configuration that matches the major version of your Cloudflare Terraform provider.

Cloudflare provider v5:

```hcl
terraform {
  required_providers {
    cloudflare = {
      source  = "cloudflare/cloudflare"
      version = "~> 5"
    }
  }
}

provider "cloudflare" {
  api_token = "<CLOUDFLARE_API_TOKEN>" # update value
}

variable "account_id" {
  default = "change_me" # update value with your CLOUDFLARE_ACCOUNT_ID
}

variable "datadome_server_side_key" {}

variable "datadome_client_side_key" {}

resource "cloudflare_worker" "datadome_worker" {
  account_id = var.account_id
  name       = "datadome_worker"
}

resource "cloudflare_workers_route" "catch_all_route" {
  zone_id = "<CLOUDFLARE_ZONE_ID>"       # update value
  pattern = "<CLOUDFLARE_ROUTE_PATTERN>" # update value, see https://developers.cloudflare.com/workers/configuration/routing/routes/
  script  = cloudflare_worker.datadome_worker.name
}

resource "cloudflare_worker_version" "datadome_worker_version" {
  account_id         = var.account_id
  worker_id          = cloudflare_worker.datadome_worker.id
  compatibility_date = "2025-04-14"
  main_module        = "datadome.js"

  modules = [{
    content_file = "dist/datadome.js" # update value
    content_type = "application/javascript+module"
    name         = "datadome.js"
  }]

  # Expose both DataDome keys to the Worker as secret text bindings
  bindings = [
    {
      name = "DATADOME_SERVER_SIDE_KEY"
      text = var.datadome_server_side_key
      type = "secret_text"
    },
    {
      name = "DATADOME_CLIENT_SIDE_KEY"
      text = var.datadome_client_side_key
      type = "secret_text"
    }
  ]
}

# Deploy the version above to 100% of the Worker's traffic
resource "cloudflare_workers_deployment" "datadome_worker_deployment" {
  account_id  = var.account_id
  script_name = cloudflare_worker.datadome_worker.name
  strategy    = "percentage"

  versions = [{
    percentage = 100
    version_id = cloudflare_worker_version.datadome_worker_version.id
  }]
}
```

Cloudflare provider v4:

```hcl
terraform {
  required_providers {
    cloudflare = {
      source  = "cloudflare/cloudflare"
      version = "~> 4"
    }
  }
}

provider "cloudflare" {
  api_token = "<CLOUDFLARE_API_TOKEN>" # update value
}

variable "datadome_server_side_key" {}

variable "datadome_client_side_key" {}

resource "cloudflare_worker_route" "catch_all_route" {
  zone_id     = "<CLOUDFLARE_ZONE_ID>"       # update value
  pattern     = "<CLOUDFLARE_ROUTE_PATTERN>" # update value, see https://developers.cloudflare.com/workers/configuration/routing/routes/
  script_name = cloudflare_worker_script.datadome_worker.name
}

resource "cloudflare_worker_script" "datadome_worker" {
  account_id = "<CLOUDFLARE_ACCOUNT_ID>"                 # update value
  name       = "datadome_worker"
  content    = file("<PATH_TO_THE_DATADOME.TS_FILE>") # update value

  secret_text_binding {
    name = "DATADOME_SERVER_SIDE_KEY"
    text = var.datadome_server_side_key
  }

  secret_text_binding {
    name = "DATADOME_CLIENT_SIDE_KEY"
    text = var.datadome_client_side_key
  }
}
```

- Update it with your personal values.

- Create the secret for `datadome_server_side_key` to hold the value of your DataDome server-side key, which you can find in your DataDome dashboard:

```shell
export TF_VAR_datadome_server_side_key=<YOUR DATADOME_SERVER_SIDE_KEY>
```

- Create the secret for `datadome_client_side_key` to hold the value of your DataDome client-side key, which you can find in your DataDome dashboard:

```shell
export TF_VAR_datadome_client_side_key=<YOUR DATADOME_CLIENT_SIDE_KEY>
```

- Run `terraform init`.

- Run `terraform plan`. With provider v4, two resources will be created: the Worker script and the Worker route. With provider v5, four resources will be created: the Worker, the Worker version, the Worker deployment, and the Worker route.

- Run `terraform apply`.

Congrats! You can now see your traffic in your DataDome dashboard.
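At runtime, the two `secret_text` bindings declared in the Terraform configuration are exposed to a module Worker as string properties of the `env` argument of its `fetch` handler, under the binding names. The minimal sketch below assumes module Worker syntax; `readDataDomeKeys` is a hypothetical helper, not part of the DataDome script, that fails fast when a binding is missing.

```javascript
// Binding names declared in the Terraform configuration
const DATADOME_BINDINGS = ["DATADOME_SERVER_SIDE_KEY", "DATADOME_CLIENT_SIDE_KEY"];

// Hypothetical helper (not part of the DataDome Worker script):
// reads both DataDome keys from a module Worker's `env` object.
function readDataDomeKeys(env) {
  for (const name of DATADOME_BINDINGS) {
    // Secret text bindings arrive as strings; absent or empty means the binding is missing
    if (typeof env[name] !== "string" || env[name].length === 0) {
      throw new Error(`Missing Worker binding: ${name}`);
    }
  }
  return {
    serverSideKey: env.DATADOME_SERVER_SIDE_KEY,
    clientSideKey: env.DATADOME_CLIENT_SIDE_KEY,
  };
}

// Module Worker shape: `env` is the second argument of fetch().
// Kept as a plain object so the sketch also runs outside Cloudflare;
// in a deployed module Worker this object would be the default export.
const worker = {
  async fetch(request, env) {
    const keys = readDataDomeKeys(env);
    // The keys would then be passed as the serverSideKey / clientSideKey options
    return new Response(`Loaded: ${Object.keys(keys).join(", ")}`);
  },
};
```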

Configuration

The configuration is done inside the script, using constants.
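As an illustration of this constants-based configuration, the sketch below declares a few settings from the settings tables with values taken from their Example and Default value columns. It assumes the script declares its settings as top-level `const` declarations; check the downloaded script for the exact declarations and keep its defaults for anything you do not change.

```javascript
// Illustrative values only, taken from the settings tables.
// Assumption: the Worker script declares its settings as top-level constants.
const DATADOME_TIMEOUT = 350; // milliseconds
const DATADOME_IP_EXCLUSION = ["192.168.0.1", "192.168.0.2"]; // array of IP strings
const DATADOME_LOGPUSH_CONFIGURATION = [
  "X-DataDome-botname",
  "X-DataDome-captchapassed",
  "X-DataDome-isbot",
]; // array of enriched header names
const DATADOME_ENABLE_GRAPHQL_SUPPORT = true; // boolean
const DATADOME_ENABLE_DEBUGGING = false; // set to true only while troubleshooting
const DATADOME_JS_URL = "https://js.datadome.co/tags.js"; // documented default value
```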

Server-side settings

| Setting name in Worker's code | Setting name in package | Description | Required | Default value | Example |
|---|---|---|---|---|---|
| DATADOME_SERVER_SIDE_KEY | serverSideKey | Your DataDome server-side key, found in your dashboard. | Yes | - | - |
| DATADOME_TIMEOUT | timeout | Request timeout to the DataDome API, in milliseconds. | No | 300 | 350 |
| DATADOME_URL_REGEX_EXCLUSION | urlPatternExclusion | Regular expression to exclude URLs from the DataDome analysis. | No | List of excluded static assets below | - |
| DATADOME_URL_REGEX_INCLUSION | urlPatternInclusion | Regular expression to include only matching URLs in the traffic analyzed by DataDome. | No | - | /login*/i |
| DATADOME_IP_EXCLUSION | ipExclusion | List of IPs whose traffic will be excluded from the DataDome analysis. | No | - | ["192.168.0.1", "192.168.0.2"] |
| DATADOME_LOGPUSH_CONFIGURATION | logpushConfiguration | List of enriched header names to log in Logpush. | No | - | ["X-DataDome-botname", "X-DataDome-captchapassed", "X-DataDome-isbot"] |
| DATADOME_ENABLE_GRAPHQL_SUPPORT | enableGraphQLSupport | Extract the GraphQL operation name and type from requests to a /graphql endpoint to improve protection. | No | false | true |
| DATADOME_ENABLE_DEBUGGING | enableDebugging | Log detailed information about the DataDome process in Workers logs. | No | false | true |

Default `DATADOME_URL_REGEX_EXCLUSION` value:

```
/\.(avi|flv|mka|mkv|mov|mp4|mpeg|mpg|mp3|flac|ogg|ogm|opus|wav|webm|webp|bmp|gif|ico|jpeg|jpg|png|svg|svgz|swf|eot|otf|ttf|woff|woff2|css|less|js|map)$/i
```

Client-side settings

| Setting name in Worker's code | Setting name in package | Description | Required | Default value | Example |
|---|---|---|---|---|---|
| DATADOME_CLIENT_SIDE_KEY | clientSideKey | Your DataDome client-side key, found in your dashboard. | Yes | - | - |
| DATADOME_JS_URL | jsUrl | URL of the DataDome JS tag, which can be changed to include the tag as first party. | No | https://js.datadome.co/tags.js | https://ddfoo.com/tags.js |
| DATADOME_JS_ENDPOINT | jsEndpoint | Endpoint of the DataDome JS tag. | No | | |
| DATADOME_JS_TAG_OPTIONS | jsTagOptions | JSON object describing the DataDome JS tag options. | No | { "ajaxListenerPath": true } | { "ajaxListenerPath": "example.com", "allowHtmlContentTypeOnCaptcha": true } |
| DATADOME_JS_URL_REGEX_EXCLUSION | jsUrlRegexExclusion | Regular expression to NOT set the DataDome JS tag on matching URLs. | No | - | - |
| DATADOME_JS_URL_REGEX_INCLUSION | jsUrlRegexInclusion | Regular expression to set the DataDome JS tag only on matching URLs. | No | - | /login*/i |
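The URL settings are JavaScript regular expressions, so `*` is a quantifier, not a glob: `/login*/i` matches `logi` followed by any number of `n`, which also matches a path such as `/logistics`. The sketch below tests the default exclusion expression and the example inclusion expression against a few sample paths. It assumes the expressions are applied to the URL path without its query string; check the Worker script for the exact part of the URL it tests. `classifyPath` is a hypothetical name used for illustration.

```javascript
// Default DATADOME_URL_REGEX_EXCLUSION value listed in the server-side settings
const DEFAULT_EXCLUSION =
  /\.(avi|flv|mka|mkv|mov|mp4|mpeg|mpg|mp3|flac|ogg|ogm|opus|wav|webm|webp|bmp|gif|ico|jpeg|jpg|png|svg|svgz|swf|eot|otf|ttf|woff|woff2|css|less|js|map)$/i;

// Example DATADOME_URL_REGEX_INCLUSION value from the settings tables
const EXAMPLE_INCLUSION = /login*/i;

// Hypothetical illustration: reports which expression matches a given path.
// Assumes the path has no query string (the exclusion regex is anchored with $).
function classifyPath(path) {
  return {
    path,
    excludedAsStaticAsset: DEFAULT_EXCLUSION.test(path),
    matchesInclusion: EXAMPLE_INCLUSION.test(path),
  };
}

for (const path of ["/assets/app.JS", "/img/logo.png", "/login", "/logistics", "/home"]) {
  console.log(classifyPath(path));
}
```

If the inclusion should only cover paths starting with `/login`, an anchored expression such as `/^\/login/i` states that more precisely.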

Update with Terraform

- Download the latest version of our Cloudflare Worker script.

- Paste the content of the `datadome.ts` file into the file used as the content of your Worker script.

- Run `terraform plan`. With provider v4, one resource, the Worker script, will be changed.

- Run `terraform apply`.

Uninstallation with Terraform

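To delete the DataDome Worker and its script, run the following command from the directory containing your `datadome_worker.tf` and `terraform.tfstate` files:

```shell
# Cloudflare provider v4
terraform destroy -target cloudflare_worker_script.datadome_worker

# Cloudflare provider v5
terraform destroy -target cloudflare_worker.datadome_worker
```

Logging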

DataDome custom logging

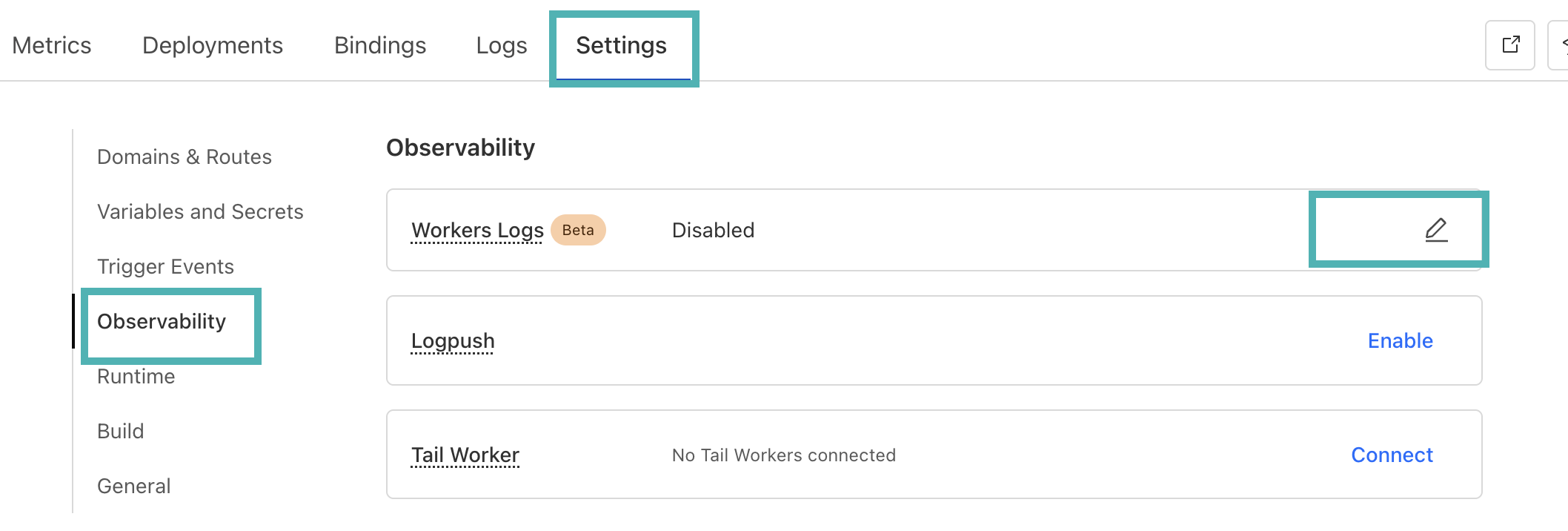

- Inside the Cloudflare Dashboard, go to the DataDome Worker's page.

- Click on Settings, then go to the Observability section.

- Click on the pen icon next to Workers Logs.

- Enable logs.

- Click on Deploy.

- You will see the logs inside the Logs tab.

By default, DataDome logs errors only (such as configuration errors). For detailed debugging logs, set `DATADOME_ENABLE_DEBUGGING` to `true`.

DataDome logs format

The DataDome custom logs have the following format:

```json
{
  "step": "string",
  "result": "string",
  "reason": "string",
  "details": {
    "key": "value"
  },
  "company": "DataDome",
  "line": 123
}
```

Logpush

You can use Logpush to send logs to a destination supported by Logpush (Datadog, Splunk, S3 Bucket…).

Cloudflare plan: Logpush is available to customers on Cloudflare's Enterprise plan.

Update the Worker’s script

- Fill the `DATADOME_LOGPUSH_CONFIGURATION` value with the names of the enriched headers you want to log, as an array of strings.

The possible values are available in the Enriched headers page.

For example:

```javascript
DATADOME_LOGPUSH_CONFIGURATION = ["X-DataDome-botname", "X-DataDome-isbot", "x-datadomeresponse"]
```

Enable Logpush

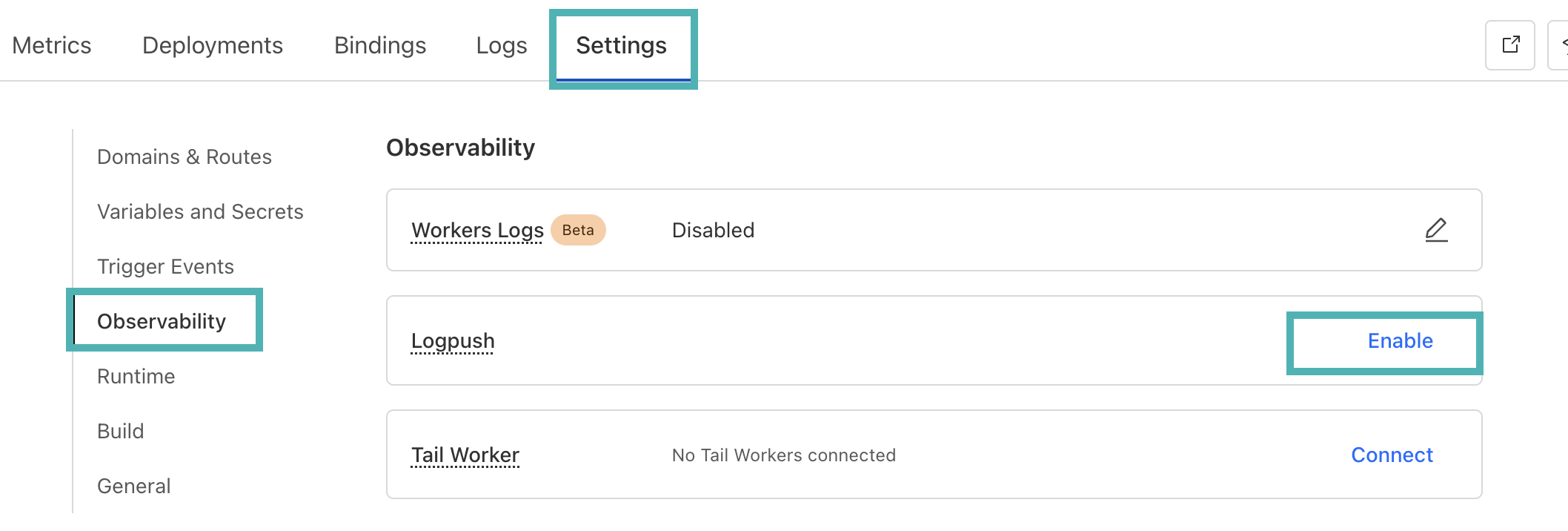

- Inside the Cloudflare Dashboard, go to the DataDome Worker's page.

- Click on Settings, then go to the Observability section.

- Click on Enable next to Logpush.
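Once logs reach your destination, DataDome entries can be told apart from other Worker logs by the `company` field of the DataDome custom log format. The minimal sketch below assumes each log message is available to you as a JSON string; the envelope Logpush wraps around Worker logs depends on your dataset and destination, so adapt the extraction step accordingly. `parseDataDomeLogs` is a hypothetical helper.

```javascript
// Hypothetical helper: keeps only DataDome custom log entries from raw log messages,
// assuming each DataDome entry is a JSON string in the documented format
// ({ step, result, reason, details, company, line }).
function parseDataDomeLogs(messages) {
  const entries = [];
  for (const message of messages) {
    let parsed;
    try {
      parsed = JSON.parse(message);
    } catch {
      continue; // not JSON: a log from another source, skip it
    }
    // Only objects whose company field is "DataDome" are kept
    if (parsed !== null && typeof parsed === "object" && parsed.company === "DataDome") {
      entries.push(parsed);
    }
  }
  return entries;
}

const sample = [
  "plain text log from another part of the Worker",
  JSON.stringify({ step: "string", result: "string", reason: "string", details: { key: "value" }, company: "DataDome", line: 123 }),
  JSON.stringify({ company: "Other" }),
];

console.log(parseDataDomeLogs(sample));
```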

Add custom fields

Requires version 2.2.0 of the CloudflareWorker module.

Custom fields feature: DataDome lets you enrich our detection engine in real time by sending custom fields with your business data. These fields can be used by specific detection models.

👋 Please reach out to our support team to review the data received.

Sample callback function to set custom fields dynamically

The callback is a function receiving a single parameter: the incoming Cloudflare HTTP request, a standard Fetch API `Request` object.

```javascript
// Sample code for custom fields
// Editing the datadome.js file directly
var DATADOME_CUSTOM_FIELD_STRING_1 = function (request) {
  // request.headers is a Headers object: get() returns null when the header is absent
  return request.headers.get('x-user-tier') || 'standard';
};
```

```javascript
// Sample code for custom fields
// when calling activateDataDome
const dataDomeHandler = activateDataDome(myHandler, {
  serverSideKey: env.DATADOME_SERVER_SIDE_KEY,
  clientSideKey: env.DATADOME_CLIENT_SIDE_KEY,
  customFieldString1: function (request) {
    // Read the header through the Headers API; fall back to 'standard' when absent or empty
    return request.headers.get('x-user-tier') || 'standard';
  },
  // ...other options
});
```
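In a Worker, `request.headers` is a `Headers` object, so header values are read with `get()`, which is case-insensitive and returns `null` for a missing header. To check a callback before deploying it, you can call it with a standard `Request`. The sketch below assumes Node.js 18 or later, where `Request` is available globally; `tierFromRequest` is a hypothetical name for a custom field callback.

```javascript
// Hypothetical custom field callback: reads the header through the Headers API
// and falls back to 'standard' when it is missing or empty.
const tierFromRequest = function (request) {
  return request.headers.get('x-user-tier') || 'standard';
};

// Header names are case-insensitive in the Headers API, so 'X-User-Tier' is found too.
const withTier = new Request('https://example.com/', { headers: { 'X-User-Tier': 'gold' } });
const withoutTier = new Request('https://example.com/');

console.log(tierFromRequest(withTier));    // prints: gold
console.log(tierFromRequest(withoutTier)); // prints: standard
```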
